I am a Research Scientist at Meta.

I completed my PhD at the University of Southern California, co-advised by Prof. Joseph J. Lim and Prof. Erdem Bıyık. I was fortunate to intern at Meta Reality Labs, Microsoft Research Montreal, and Naver AI, Seoul. Before joining USC, I spent two years in Seoul working at Samsung Research Korea. Earlier, I graduated from IIT Delhi, where I worked under the guidance of Prof. Sumeet Agarwal and Prof. Rajakrishnan Rajkumar.

Email | Twitter | CV | Google Scholar | LinkedIn

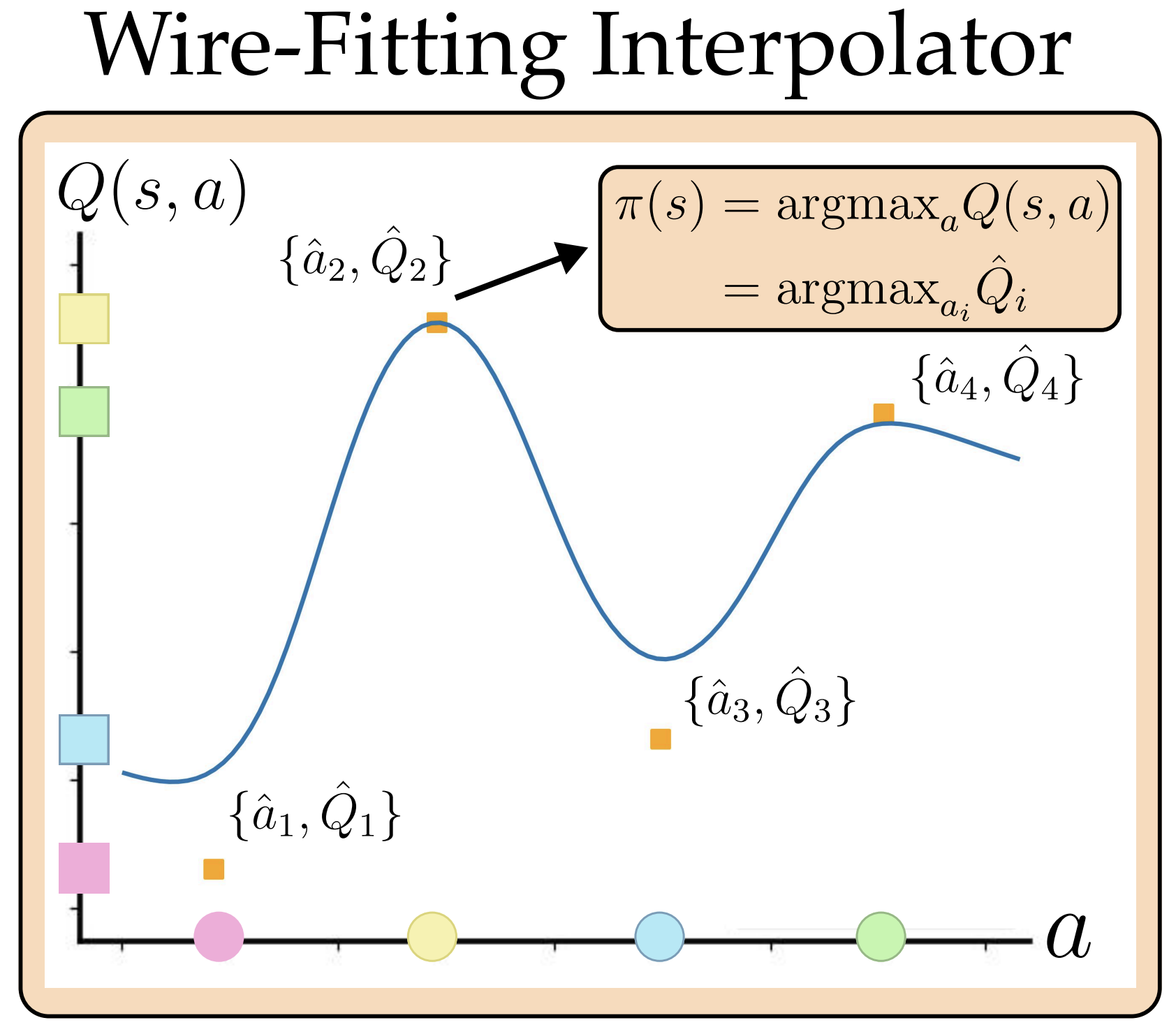

Yigit Korkmaz*, Urvi Bhuwania*, Ayush Jain†, Erdem Bıyık†
NeurIPS 2025
Actor-free Q-learning in continuous action spaces by learning a "wire-fitted Q-function".
arXiv | Code

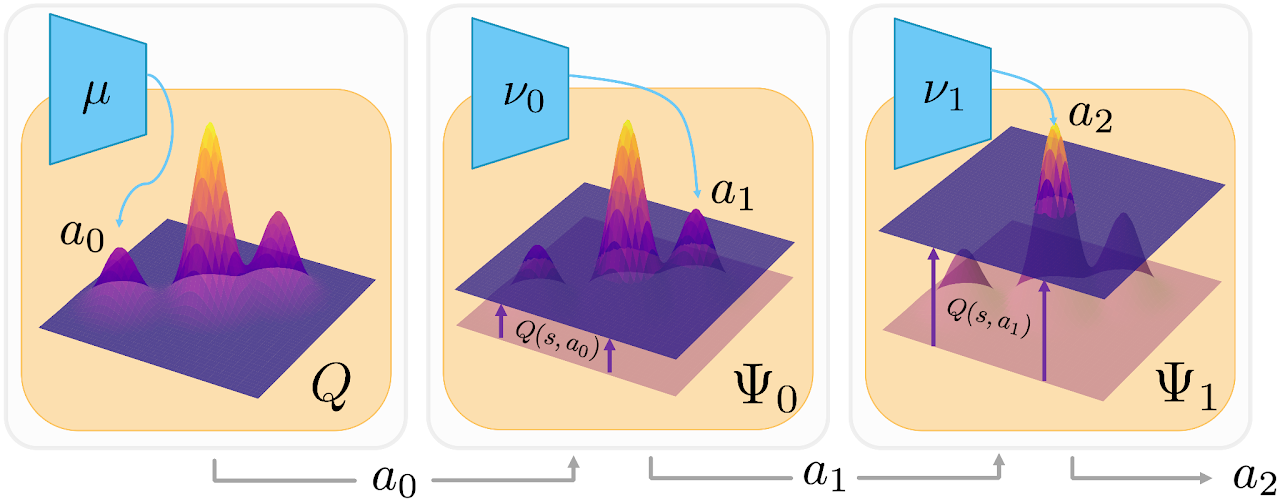

Ayush Jain, Norio Kosaka, Xinhu Li, Kyung-Min Kim, Erdem Bıyık, Joseph J. Lim
RLC 2025 (Reinforcement Learning Conference)
Outstanding Paper Award on Empirical Reinforcement Learning Research
We identify that TD3 gets stuck in local optima in tasks with complex Q-functions and propose a new actor architecture to find better optima.
Paper | arXiv

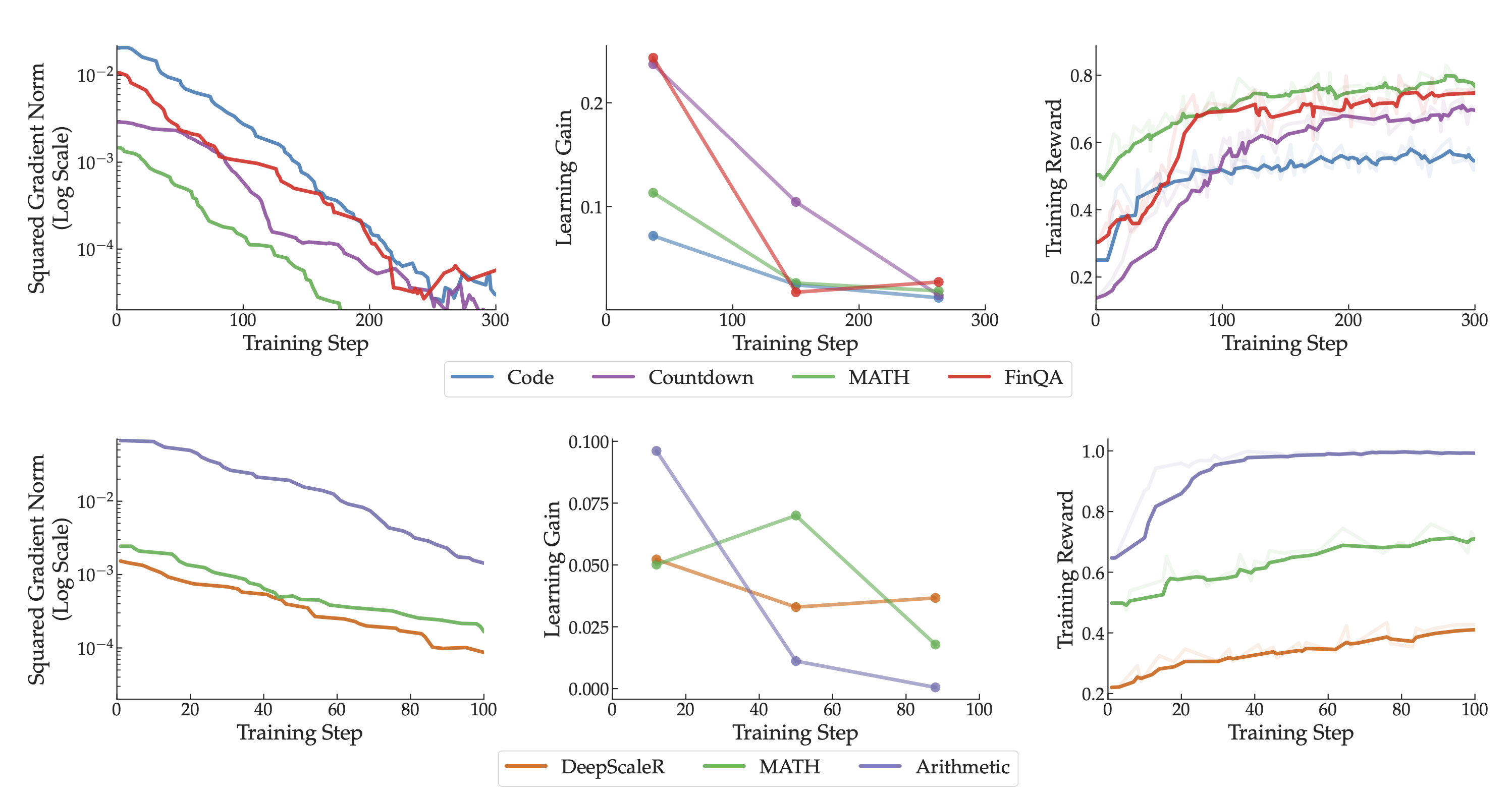

Runzhe Wu, Ankur Samanta, Ayush Jain, Scott Fujimoto, Jeongyeol Kwon, Ben Kretzu, Youliang Yu, Kaveh Hassani, Boris Vidolov, Yonathan Efroni
EACL 2026 Findings
We find that in RL post-training of multi-task LLMs, certain tasks produce much larger gradients, which biases model updates toward those tasks, even though these larger gradients don't translate to greater learning gains.
arXiv

Xinhu Li, Ayush Jain, Zhaojing Yang, Yigit Korkmaz, Erdem Bıyık
Preprint
Expert demonstrators are often constrained by indirect control, setup restrictions, and hardware safety. We propose an inverse RL method to learn from such constrained demonstrations and find shorter trajectories to the goal.
arXiv | Project Page

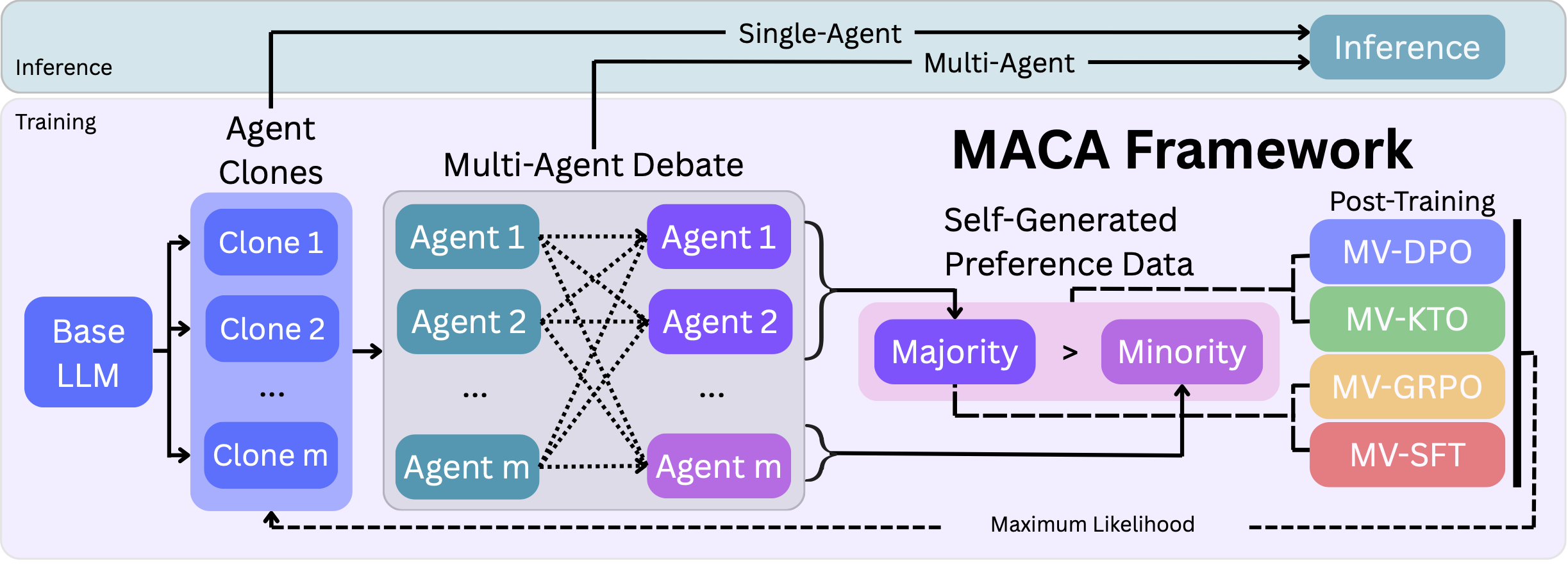

Ankur Samanta, Akshayaa Magesh, Youliang Yu, Runzhe Wu, Ayush Jain, Daniel Jiang, Boris Vidolov, Paul Sajda, Yonathan Efroni, Kaveh Hassani
Preprint
Language models learn to maintain consistent answers across diverse reasoning paths and ground arguments in peer reasoning by reinforcing their own debate consensus, driving reasoning self-improvement.
arXiv | Code

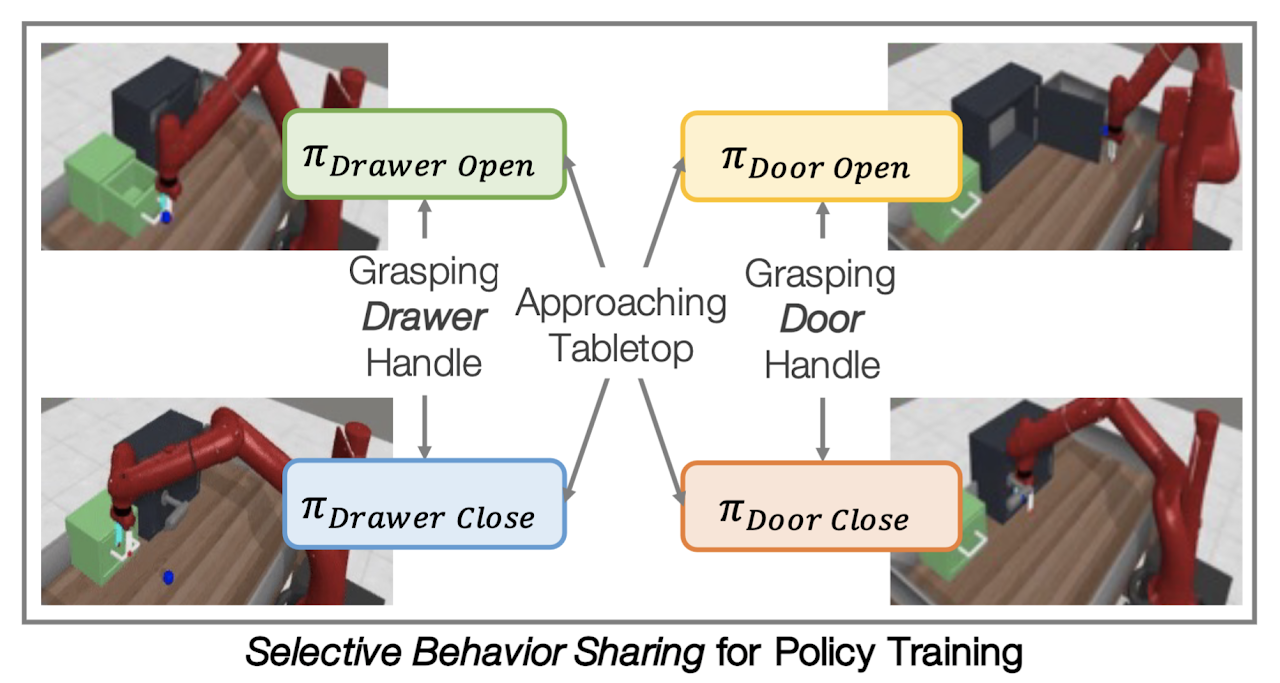

Grace Zhang*, Ayush Jain*, Injune Hwang, Shao-Hua Sun, Joseph J. Lim
ICLR 2025
We introduce behavior-sharing for efficient multitask reinforcement learning, complementary to parameter-sharing and data-sharing.
Paper | arXiv | Project Page | Code

Ayush Jain*, Norio Kosaka*, Kyung-Min Kim, Joseph J. Lim
ICLR 2022
For optimal decision-making under a varying action space, we learn the relations between the available actions using a graph-attention-network-based policy architecture.
Paper | Project Page | Code | Talk

Ayush Jain*, Andrew Szot*, Joseph J. Lim
ICML 2020
Our proposed RL framework enables agents to solve sequential decision-making tasks even when the available actions (tools or skills) have not been seen before.
Paper | Project Page | arXiv | Code | Talk | Environment

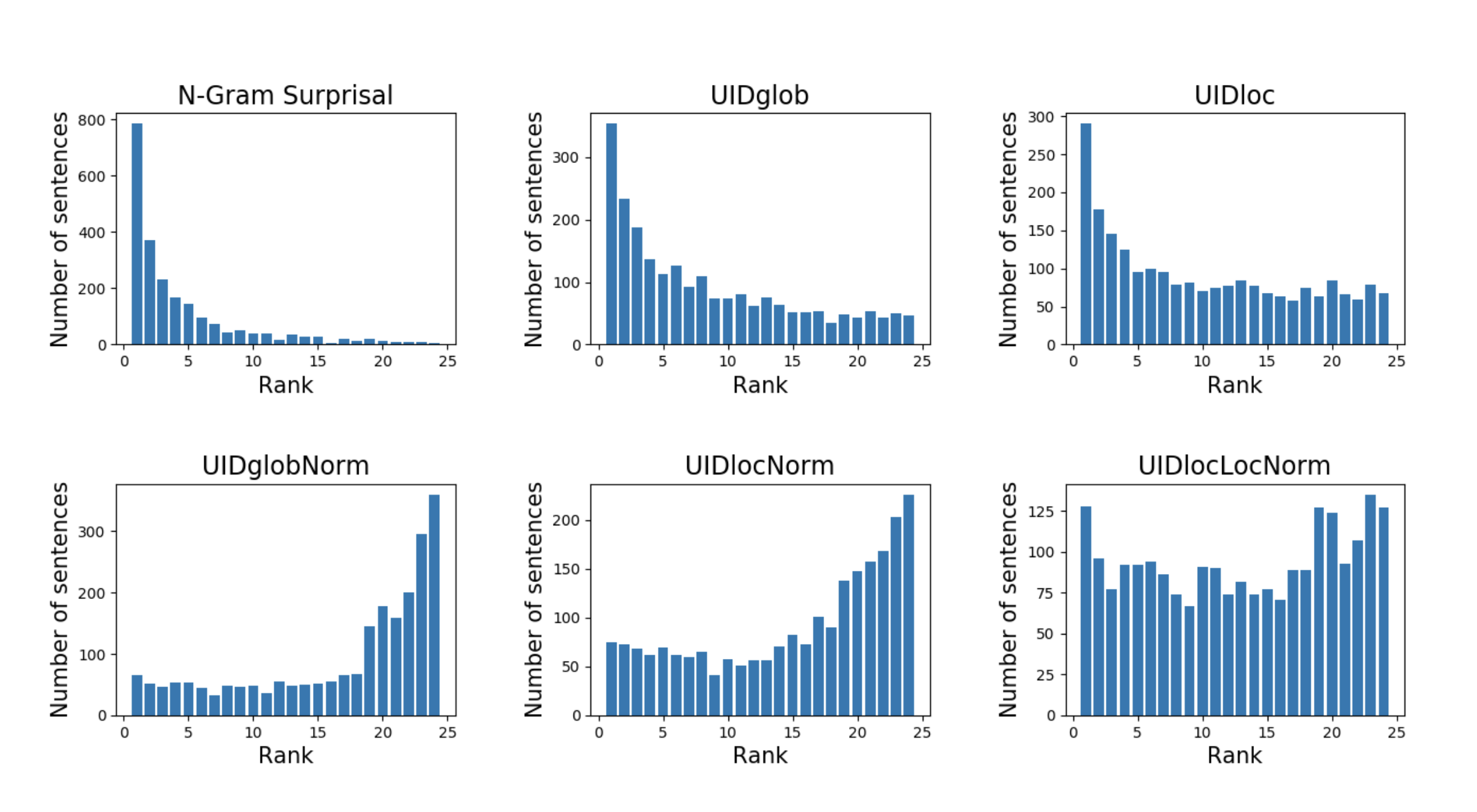

Ayush Jain*, Vishal Singh*, Sidharth Ranjan*, Rajakrishnan Rajkumar, Sumeet Agarwal
COLING 2018 Workshop on Linguistic Complexity and Natural Language Processing
This work investigates the extent to which word order choices in Hindi are influenced by the drive to minimize information variance in a sentence.

Teaching Assistant (USC):
